This token points towards the corresponding value in the existing data.

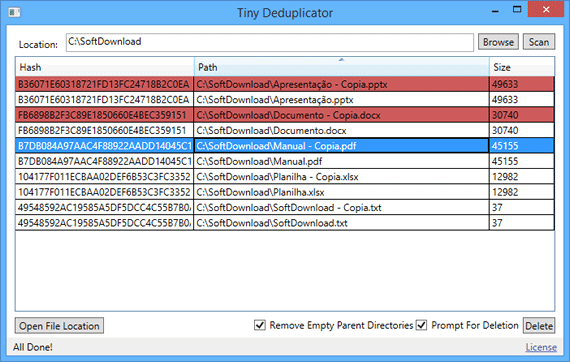

Tokenization: A token replaces the duplicate value.It then passes the deduplicated version of the data to the destination repository. Removal: The ETL process deletes the duplicate value.If the process detects a duplicate, it will take one of the following actions: The process then compares the staging layer data to other available sources. The ETL process holds data in a staging layer after import. ETL Processĭeduplication is one of the functions of the transformation process in ETL ( Extract, Transform, Load ). Query-based deduplication is generally for fine-tuning a database, rather than make large-scale efficiency improvements. This kind of deduplication can also happen as part of the post-processing, which is the cleansing process a data owner performs after data has moved from the target source to its destination. Organizations generally perform this kind of deduplication manually, through a stored query, or with a batch file. These can be removed using a query or a script, as long as they are truly repeating. Within a relational database, individual rows may contain repeating values. There are several ways of performing data deduplication, depending on the nature of the task. Deduplication is the process of removing these redundancies so that the organization has reliable backups with as little repetition as possible. If 99% of each backup is unchanged, then up to 361 GB of that storage is wasted.Īs these redundancies scale up, they result in higher storage costs and slower query results. If the size of the export is 1 GB, then the organization will end up with 365 GB of backup data after a year. The organization will likely choose to back up the CRM data regularly, perhaps every day. For this company, an average of 1% of their customer details changes each day, while the other 99% remain the same. For example, consider an organization that has a production system such as a CRM. 
In some circumstances, duplicated data can start to affect performance by slowing down query results.ĭata duplication can happen on a large scale in some processes, such as data backups. As data volumes expand, data costs increase.ĭuplicated data has no value for the owner, yet it still costs money. There are storage costs for holding data, and then there are processing costs for querying data. Why is Deduplication Important?ĭata has a monetary cost for its owner. This process helps speed up processes such as backups, and other processes that may result in large-scale repetition of data values. Deduplication is a method of removing duplicate values from data.
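The storage figures in the CRM backup example can be checked with a short calculation. This is a minimal sketch under three stated assumptions:

- Exactly 1% of the data changes every day.
- A deduplicated store keeps the first export in full and then adds only each later day's changed portion.
- The constants and function names are illustrative only and are not part of any particular backup tool.

Under these assumptions the redundant data comes to roughly 360 GB. That is slightly below the 361 GB upper bound (99% of 365 GB), because the first backup must be stored in full.

```python
# Sketch of the CRM backup example: daily 1 GB full exports versus a
# deduplicated store. Assumption: exactly 1% of the data changes per day,
# and the deduplicated store keeps one full copy plus each day's changes.

EXPORT_GB = 1.0      # size of one full export
DAYS = 365           # one daily backup for a year
CHANGE_RATE = 0.01   # fraction of the data that changes each day


def full_copy_storage(export_gb: float, days: int) -> float:
    """Storage used when every daily export is kept in full."""
    return export_gb * days


def deduplicated_storage(export_gb: float, days: int, change_rate: float) -> float:
    """Storage used when the first export is kept in full and every
    later backup adds only the portion that changed that day."""
    return export_gb + export_gb * change_rate * (days - 1)


if __name__ == "__main__":
    full = full_copy_storage(EXPORT_GB, DAYS)
    dedup = deduplicated_storage(EXPORT_GB, DAYS, CHANGE_RATE)
    print(f"Full copies:  {full:.2f} GB")
    print(f"Deduplicated: {dedup:.2f} GB")
    print(f"Redundant:    {full - dedup:.2f} GB")
```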
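For the relational-database case, a single query can remove rows that repeat across the compared columns. The sketch below uses Python's built-in `sqlite3` module and rests on these assumptions:

- It uses a hypothetical `customers` table.
- Rows count as duplicates only when both `name` and `email` match.
- It keeps the earliest row (lowest `rowid`) in each group.

`rowid` is SQLite-specific, so other databases would need their own equivalent of this query.

```python
import sqlite3


def remove_duplicate_rows(conn: sqlite3.Connection) -> int:
    """Delete rows that repeat the same (name, email) pair, keeping the
    row with the lowest rowid in each group. Returns the number of rows
    deleted. The table and column names are hypothetical."""
    cur = conn.execute(
        """
        DELETE FROM customers
        WHERE rowid NOT IN (
            SELECT MIN(rowid) FROM customers GROUP BY name, email
        )
        """
    )
    conn.commit()
    return cur.rowcount


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (name TEXT, email TEXT)")
    conn.executemany(
        "INSERT INTO customers (name, email) VALUES (?, ?)",
        [
            ("Ada", "ada@example.com"),
            ("Ada", "ada@example.com"),  # exact repeat: removed
            ("Bob", "bob@example.com"),
            ("Bob", "bob@example.org"),  # different email: kept
        ],
    )
    removed = remove_duplicate_rows(conn)
    remaining = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
    print(f"removed={removed} remaining={remaining}")
```

Grouping on the compared columns is what keeps the query from touching rows that merely look similar. Only rows identical in every listed column are treated as repeats.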
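The staging-layer check in the ETL section can be sketched as follows. This is a hypothetical illustration, not any specific ETL tool's API. It makes these assumptions:

- A list of strings stands in for the staging layer.
- A dictionary of keyed values stands in for the other available sources.
- Duplicates are detected by exact equality.
- `Token`, `deduplicate_staging`, and the `"remove"`/`"tokenize"` modes are names invented here.

```python
from dataclasses import dataclass
from typing import Dict, List, Union


@dataclass(frozen=True)
class Token:
    """Hypothetical placeholder that points at a value already held in
    the existing data, identified by that value's key."""
    ref: str


def deduplicate_staging(
    staged: List[str],
    existing: Dict[str, str],
    mode: str = "remove",
) -> List[Union[str, Token]]:
    """Compare staged values with existing data and either drop
    duplicates ("remove") or replace them with tokens ("tokenize").
    The returned list is what would be passed to the destination."""
    if mode not in ("remove", "tokenize"):
        raise ValueError(f"unknown mode: {mode!r}")
    # Index existing values by content for O(1) lookups. If the same value
    # appears under several keys, the last key seen wins.
    key_for_value = {value: key for key, value in existing.items()}
    output: List[Union[str, Token]] = []
    for value in staged:
        key = key_for_value.get(value)
        if key is None:
            output.append(value)       # new value: pass it through
        elif mode == "tokenize":
            output.append(Token(key))  # Tokenization: point to existing value
        # mode == "remove": Removal, so the duplicate is simply dropped
    return output


if __name__ == "__main__":
    existing = {"c1": "alice@example.com", "c2": "bob@example.com"}
    staged = ["bob@example.com", "carol@example.com"]
    print(deduplicate_staging(staged, existing, "remove"))
    print(deduplicate_staging(staged, existing, "tokenize"))
```

Removal sends the least data to the destination. Tokenization keeps one entry per staged record, so downstream consumers can still resolve a duplicate through its token.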
